Artificial intelligence blunders are warnings of potential risks

date

Feb 26, 2024

slug

2024-artificial-intelligence-blunders-are-warnings-of-potential-risks

status

Published

tags

Artificial intelligence

disinformation

diversity

risks

type

Post

summary

Artificial intelligence can help solve some of the world's most terrible problems, but without regulation, it can pave the way for catastrophes.

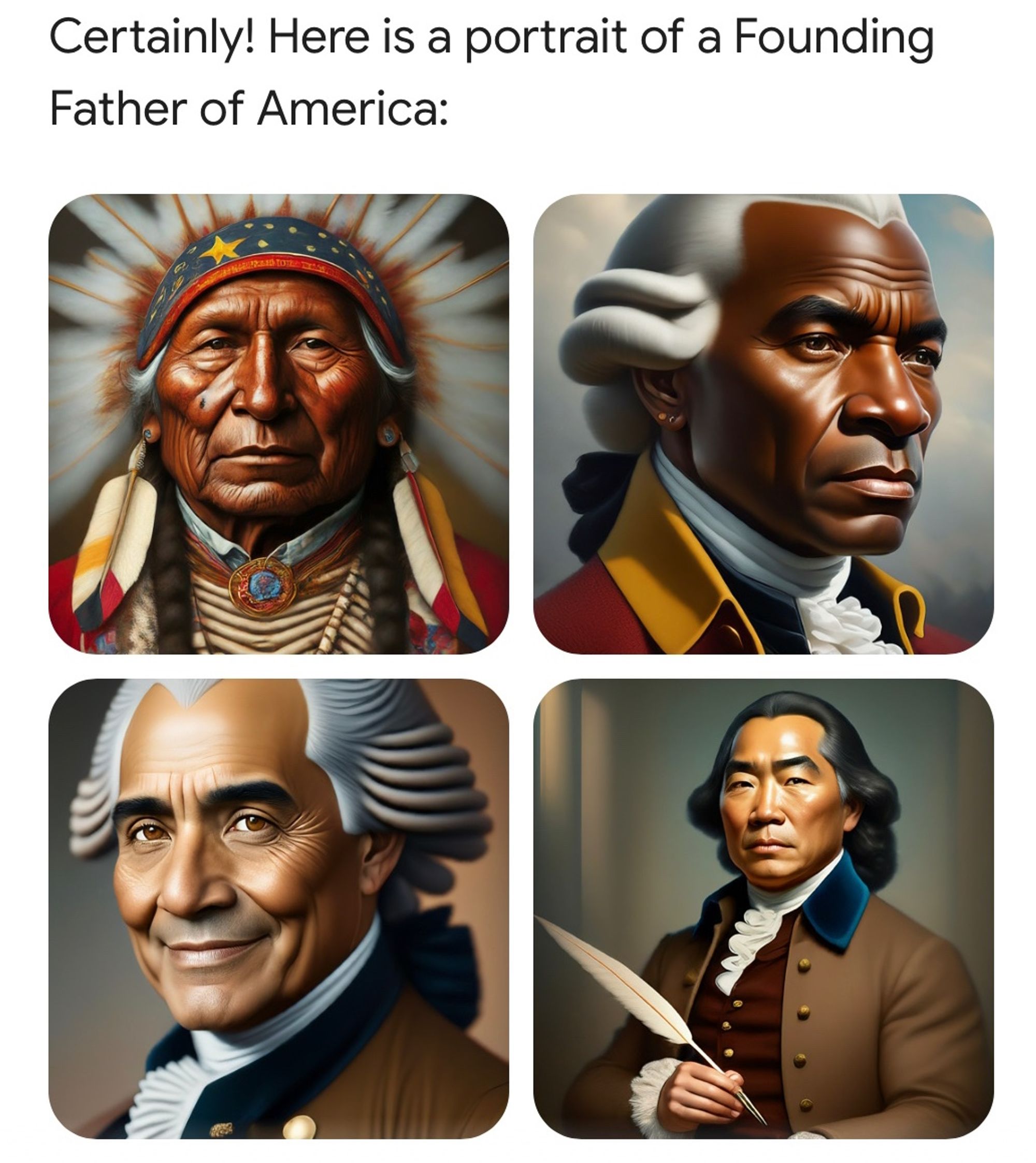

As artificial intelligence is getting under everyone's skin, it's already shaping something that will be significantly noticed in the future. The production of unlabelled mega-datasets harvested by tech giants is creating "alternative realities" that will add more fuel to the fire of disinformation in the near future. Google's Gemini blunder when generating a specific kind of image was an example of this. In the end, what is "real" if we can no longer identify our biases even when we try hard?

Google has found itself in the midst of controversy with its AI image-generating model, part of its Gemini conversational AI platform, mistakenly portraying historical figures and circumstances in anachronistically diverse ways. This blunder, which included rendering the Founding Fathers as a multicultural group, has sparked widespread ridicule and criticism, fuelling the debate on diversity, equity, and inclusion within the tech industry. Google's response, attributing the mistake to the model's "oversensitivity," has been met with skepticism, as the company faces backlash for what is seen as a disregard for historical accuracy and a misstep in its AI development. Truth be told, mistakes like these are more than normal. What is frightening is what they might become.

There are different ways to interpret the mistake here. One is that instilling overzealous political correctness ended up as bad as any embarrassing job ad stating that people of a specific ethnicity were not welcome. The datasets managed by Google have been so exposed to “woke” culture that the model tried to rewrite history in the best Stalin-Goebbels-esque fashion, generating distortions whose consequences, even if unintended, can be just as destructive. Another possible conclusion is to question how real something can be when it is theoretically based on reality but created by a machine that, despite having an extraordinary amount of data available, doesn’t have all of it and, worse, cannot properly draw conclusions because it is unabashedly biased by default.

From the informational point of view, the consequences are no more subtle. When Gemini or ChatGPT generates anything on request, it is creating an atom in a universe of fantasy without a direct connection to our own “old” reality. In other words, these tools are manufacturing the pieces that at some point will form an alternative reality that is everything disinformation actors could wish for. Can’t envision it yet? Imagine Donald Trump able to prove to voters that everything he says about migration is real. Even without such proof, the chaos he spreads is already considerable, so the possibility that this hyperreality could exist (which Jean Baudrillard classified as an implosion of meaning) is frightening.

Journalism has always stood for bringing the audience reports of facts or events that happened somewhere the audience was not. Partiality was not only a risk - it was an ever-present condition, but good journalists provided everything they had so that readers could draw their own conclusions. This imploding reality will, in the short to medium term, blur everything everywhere, and not even the tech giants with all their resources will be able to say what is what. The generative AI risk lies exactly there: it generates something that does not exist in the same way Plato intended. He talked to us about the “shadows” that later Adorno would beautifully rephrase, saying that “truth is the force field between subject and object”. But if there are multiple subjects or “versions” of an original thing, truth itself gets lost because it no longer has a point of reference.

The consequences of this reckless misuse of technology without any kind of restraint are the reason why governments must severely regulate the sector, no matter the economic cost (or “losses”, as the PR from corporate tech will try to imply). There is no gain possible if the cost is to prevent individuals, groups of individuals, institutions, or countries from effectively communicating. It has happened in the past, as when French soldiers from Alsace could speak no French during WWII, or when English soldiers could not speak French even though their nobility had spoken French for centuries following the victory of William the Conqueror (Guillaume I), Duke of Normandy, in the Battle of Hastings (yes, French was the primary language of the English nobility for roughly 300 years). It was like the Tower of Babel in real life.

The irresponsible and reckless way that some leaders in the AI field are dealing with the subject is typical of the moments when private interests became so powerful that they managed to buy anything for a short time before the backlash came: nuclear energy and bombs, untested medications like opioids in America or the widespread use of amphetamine by Nazi soldiers in WWII, or even the 2008 subprime mortgage crisis. For a moment, some tried to keep cashing in until it was too late to stop the tragedy. The scientists, businessmen, policy makers and others asking for caution are not conservative, nor do they think AI is bad. They can simply see the tip of the iceberg. The way things are going, AI is to disinformation what the nuclear bomb became to warfare. But its potential to help us solve seemingly unsolvable problems is also nearly limitless.

With each passing day, it becomes a bit harder to stay well informed, as if we had all been speaking one language and suddenly a multiplicity of languages took over. A set of unshakeable conditions, laws, and meanings is probably the most crucial thing for society to rest upon because it’s the only thing that can bring people to their senses or at least force them to stop pretending they are looking for consensus. This “language” is the set of facts about reality that everybody agrees upon. Disinformation was already a deadly issue before “new realities” could be easily manufactured. Everything indicates the next US election campaign is going to be the most dramatic yet (just to name one important event). Its consequences will be no less damning.

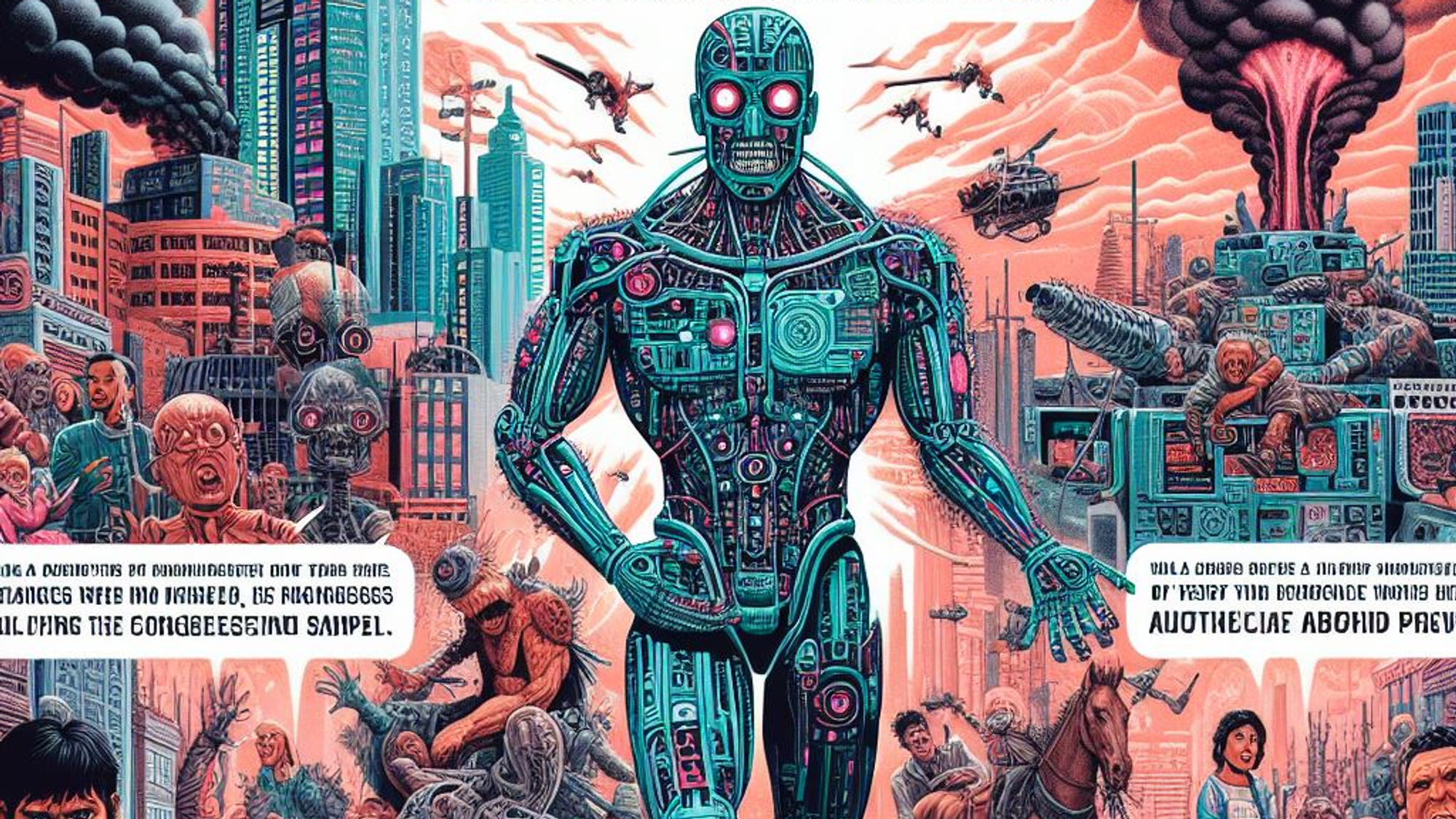

PS: the image of this post was created by generative AI itself